By: Alex Likholyot

Updated: March 2, 2021

These days 3D tours are widely used for visualizing and marketing indoor spaces. They are also used to provide detailed space documentation such as floor plans and measurements.

Anyone looking to get into making 3D tours is likely to be overwhelmed by the number of technologies offered by many different vendors and may have a hard time choosing which one to use.

This review compares the most popular methods and products for capturing property data and creating 3D tours, and discusses the advantages and weaknesses of different types of cameras and equipment. I will be drawing on my previous experience developing optical metrology systems and, more recently, designing one of the discussed technologies (iGUIDE).

For the most part, this discussion will focus on 3D tours of real physical spaces and not computer-generated 3D environments.

What is a 3D Tour?

Most often, a 3D tour is a series of 360° images, also called photospheres, panoramas, or panos, where a user can navigate from one 360° image to another. There is an opinion that simply using 360° images without an underlying 3D mesh, or 3D point cloud collected by a camera, is not enough for such a presentation to be called a 3D tour, so an explanation is needed.

The term 3D, shorthand for three dimensional, means having three degrees of freedom in which a user can move. In each 360° image a user can look left-right and up-down and these degrees of freedom add up to two dimensions. In addition, a user can move between 360° images, zooming in and out of the images during such transitions. This degree of freedom adds yet another dimension, resulting in the experience of moving through 3D space. Because of that, the term “3D tour” is well justified for tours made using connected 360° images.

Another way to show a 3D tour is to use a 3D mesh with image textures applied to it. This approach is generally not used in a web browser for real physical spaces captured with a camera, because such meshes are too complex for the limited resources of a web browser. Computer-generated 3D environments, by contrast, are often rendered in web browsers as textured 3D meshes, because their meshes are much simpler.

The representative 3D tour technologies we will examine in this article are produced by iGUIDE, Matterport, Leica, Occipital, Cupix, InsideMaps, Ricoh, Insta360, Immoviewer, Zillow, Asteroom, EyeSpy360, Metareal, and Vpix360.

Navigation in 3D Tours

When viewing a 360° image, a user can look all the way around and up and down. They can also zoom in and out. When it comes to navigating to another 360° image, there are a few different options available.

360° Image Navigation

Nearly all 3D tours allow navigation through 3D space by clicking on some kind of hotspot in the 360° image, or simply in the desired direction of movement. The 3D tour then renders a transition to the next location, simulating movement through 3D space.
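Under the hood, click-to-move navigation can be modeled as a graph of 360° images. The Python sketch below is a hypothetical illustration, not any vendor's actual implementation: it assumes each image has known floor coordinates in meters, picks the neighboring image whose bearing is closest to the direction the user clicked, and ignores the click if nothing lies within an assumed 30° tolerance.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Pano:
    """A 360° image captured at floor position (x, y), in meters (hypothetical layout)."""
    name: str
    x: float
    y: float


def bearing_deg(src: Pano, dst: Pano) -> float:
    """Bearing from src to dst in degrees: 0 points along +y, angles grow clockwise."""
    return math.degrees(math.atan2(dst.x - src.x, dst.y - src.y)) % 360.0


def angular_gap(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def pick_next(current: Pano, neighbors: list[Pano], click_yaw_deg: float,
              max_gap_deg: float = 30.0) -> Pano | None:
    """Return the neighbor closest to the clicked direction, or None if none is close enough."""
    if not neighbors:
        return None
    best = min(neighbors, key=lambda p: angular_gap(bearing_deg(current, p), click_yaw_deg))
    if angular_gap(bearing_deg(current, best), click_yaw_deg) > max_gap_deg:
        return None
    return best
```

For example, with a kitchen image 4 m straight ahead and a living-room image 4 m to the right, a click at a yaw of 85° selects the living room, while a click toward the back (200°) selects nothing.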

This type of simulated movement doesn’t render in an entirely realistic way and even in cases where an underlying 3D mesh is available, the transitions often exhibit noticeable unrealistic artifacts. Nevertheless, such imperfections have a relatively small effect on a user, because during the transition the human brain processes data mostly to assess speed and direction of motion, rather than details of the surrounding 3D space.

Image courtesy of Zillow.

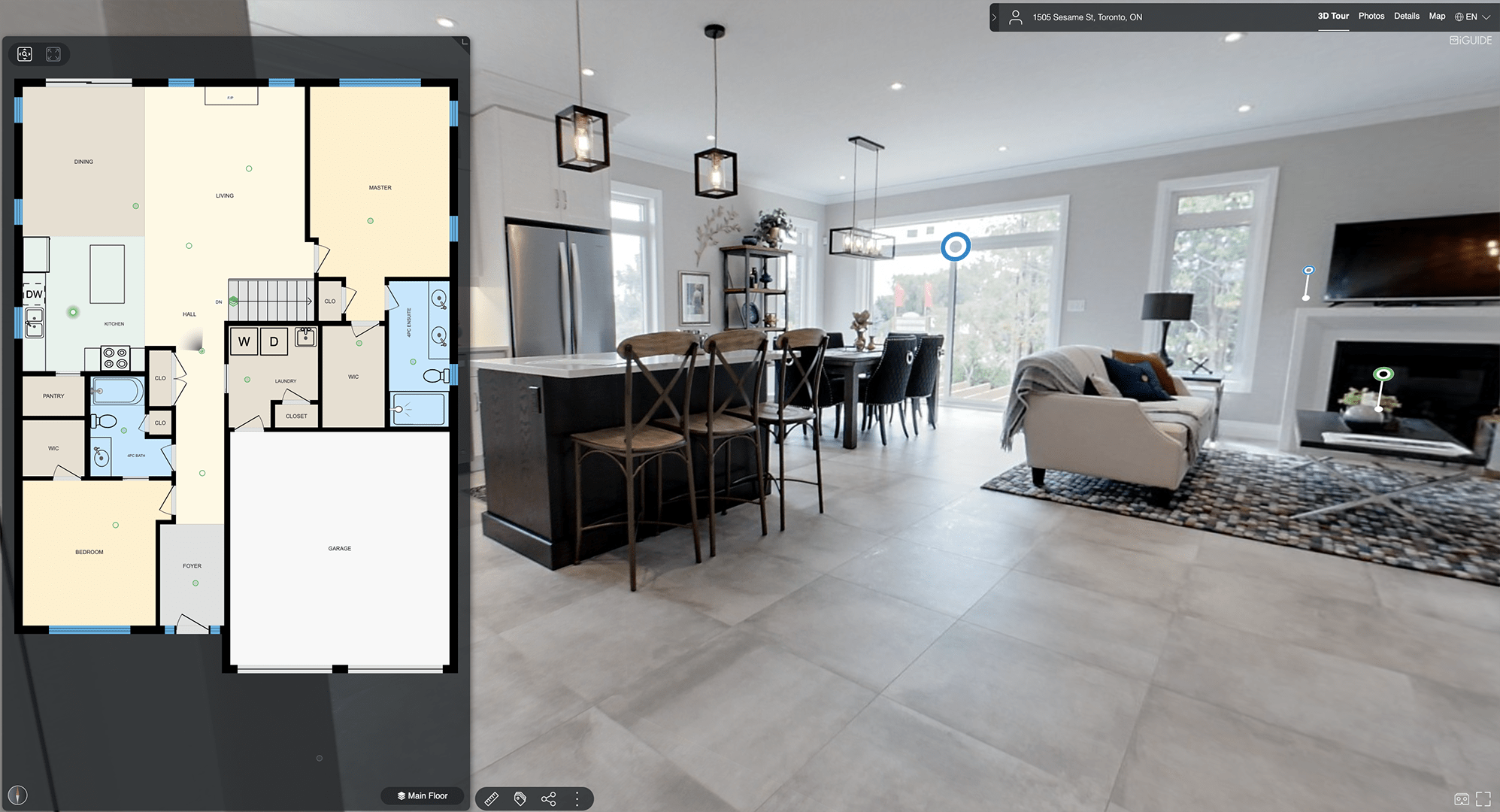

Floor Plan Navigation and Location Tracking

Without some kind of map as a reference, it can be very difficult to make sense of a floor layout. After a couple of 360° image transitions, users can find themselves lost, unsure of where they are going or where they have been.

This is where showing a floor plan or a map alongside the 360° image is very helpful and improves the user experience, as the user’s current position and viewing direction can be displayed on the floor plan, providing constant location tracking.
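Drawing the user's position and viewing direction on the floor plan is a simple coordinate transform. The sketch below assumes, hypothetically, that each 360° image has a known floor position in meters, that the plan image has a known pixel origin and scale, and that yaw is measured clockwise from the plan's "up" direction; real platforms may use different conventions.

```python
from __future__ import annotations

import math


def to_plan_pixels(x_m: float, y_m: float, origin_px: tuple[float, float],
                   px_per_m: float) -> tuple[float, float]:
    """Map floor coordinates (meters, y pointing up on the plan) to image pixels (y pointing down)."""
    ox, oy = origin_px
    return ox + x_m * px_per_m, oy - y_m * px_per_m


def view_marker(x_m: float, y_m: float, yaw_deg: float, origin_px: tuple[float, float],
                px_per_m: float, arrow_px: float = 20.0):
    """Return the marker position and the tip of an arrow showing the viewing direction."""
    px, py = to_plan_pixels(x_m, y_m, origin_px, px_per_m)
    yaw = math.radians(yaw_deg)
    # Clockwise yaw from "up": the +x component is sin(yaw), and "up" on screen is -y.
    tip = (px + arrow_px * math.sin(yaw), py - arrow_px * math.cos(yaw))
    return (px, py), tip
```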

Simply clicking on a floor plan can teleport the user instantly to another location on the floor, which makes for very efficient and intuitive navigation that is only possible on a computing device. This is the opposite of 360° image navigation, which attempts to simulate a real-life walking experience and requires many mouse clicks, or “steps”, to move across the floor.
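The difference in effort can be made concrete by counting clicks. Treating the tour as a graph in which each hotspot link is one click, a breadth-first search gives the fewest clicks between two 360° images, while a floor plan click reaches any location in one. The hallway layout below is hypothetical.

```python
from __future__ import annotations

from collections import deque


def clicks_between(links: dict[int, list[int]], start: int, goal: int) -> int | None:
    """Fewest hotspot clicks from start to goal (breadth-first search); None if unreachable."""
    if start == goal:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        for nxt in links.get(node, []):
            if nxt == goal:
                return dist + 1
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


# Hypothetical layout: eight 360° images along a hallway, each linked only to its neighbors.
hallway = {i: [j for j in (i - 1, i + 1) if 0 <= j < 8] for i in range(8)}

walking_clicks = clicks_between(hallway, 0, 7)  # one click per "step" along the hallway
floor_plan_clicks = 1                           # a single click on the plan teleports there
```

Walking from one end of this hallway to the other takes seven clicks, versus one on the floor plan.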

Floor plan or map navigation is used in very few 3D tours, notably in iGUIDE, Cupix, InsideMaps, and Google Street View. In those tours it is used in addition to 360° image navigation, giving users the option to choose how to explore the space.

Image courtesy of iGUIDE.

Dollhouse Navigation

Some 3D tours, such as those produced by Matterport, feature a 3D dollhouse. The dollhouse can be rotated in space to get an idea of the property layout for all floors at once. It is possible to navigate using the dollhouse, but it is not very practical given the number of clicks required to enter dollhouse mode, rotate the dollhouse, zoom in or out, and finally select a new location. It is definitely quicker than walking-type navigation through 360° images, but certainly not as efficient as floor plan navigation.

Image courtesy of Matterport.

Capture Equipment for 3D Tours

Since it is impossible to capture a 360° image with one lens in one shot, either several lenses, several shots with a single lens rotated between shots, or a combination of both are used. The resulting source images are then stitched together to produce a 360° image.

When using several lenses, the 360° images can exhibit stitching artifacts for objects close to the camera due to parallax errors, because the same objects are viewed from different locations by different lenses. When shooting indoors, more often than not, there will be objects in a scene that are close to the camera, so stitching artifacts most often happen when capturing images inside buildings.

To see what a parallax error looks like, hold one pen about a foot (30 cm) in front of you and a second pen two feet in front of you, and close one eye at a time. The farther pen will appear either to the left or to the right of the closer pen, depending on which eye is closed.
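The same geometry explains stitching seams in multi-lens cameras. As a rough sketch, assuming two viewpoints separated by a baseline b (a hypothetical 3 cm here, not a published spec of any camera) and an object at distance d centered between them, the angle between the two lines of sight is 2 * atan(b / (2 * d)), so it shrinks as the object moves away.

```python
import math


def parallax_deg(baseline_m: float, distance_m: float) -> float:
    """Angle between the lines of sight from two viewpoints to a point centered between them."""
    return math.degrees(2.0 * math.atan(baseline_m / (2.0 * distance_m)))


BASELINE_M = 0.03  # hypothetical spacing between two lens viewpoints

near = parallax_deg(BASELINE_M, 0.3)  # e.g. a chair arm right next to the camera
far = parallax_deg(BASELINE_M, 3.0)   # e.g. a wall across the room
```

With these assumed numbers the near object shows roughly ten times more parallax than the far wall (about 5.7° versus 0.6°), which is why seams tend to break on nearby objects.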

When a single lens is rotated several times, parallax errors can be practically eliminated if the lens is rotated around a special point called the entrance pupil, whose exact location depends on the lens design.
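A rough estimate shows why the pivot point matters. Assuming the camera turns by an angle θ about a pivot that sits a distance e from the entrance pupil, the pupil itself moves along a chord of length 2 * e * sin(θ / 2); that movement acts like a small baseline and produces parallax between near and far objects. The small-angle formula below is only an order-of-magnitude sketch, and the 5 cm offset is hypothetical.

```python
import math


def pupil_shift_m(offset_m: float, rotation_deg: float) -> float:
    """Distance the entrance pupil travels when the camera rotates by rotation_deg
    about a pivot offset_m away from it (chord of the arc)."""
    return 2.0 * offset_m * math.sin(math.radians(rotation_deg) / 2.0)


def residual_parallax_deg(offset_m: float, rotation_deg: float,
                          near_m: float, far_m: float) -> float:
    """Small-angle estimate of the relative shift between a near and a far object
    seen from the two pupil positions. Zero when the pivot is at the pupil."""
    shift = pupil_shift_m(offset_m, rotation_deg)
    return math.degrees(shift * (1.0 / near_m - 1.0 / far_m))


aligned = residual_parallax_deg(0.0, 90.0, near_m=0.5, far_m=5.0)     # pivot at the pupil
misaligned = residual_parallax_deg(0.05, 90.0, near_m=0.5, far_m=5.0)  # pivot 5 cm off
```

Under these assumptions, a 5 cm misalignment and a 90° turn leave roughly 7° of relative shift between an object at 0.5 m and a wall at 5 m, while a correctly placed pivot leaves none.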

Besides the number of lenses and other design details, 3D tour capture technologies can also differ in whether they take any spatial measurements and which measurement principle is used. Several popular 3D tour capture technologies are discussed below.

360° Cameras

Among the many different 360° cameras, the most popular and best supported are the Ricoh THETA and the Insta360. These cameras feature a slim two-lens design which reduces, but does not completely eliminate, parallax errors; cameras with more than two lenses have higher parallax errors and more stitching artifacts.

Image courtesy of Ricoh.

The 360° images produced by 360° cameras can be turned into 3D tours on several software platforms, such as Cupix, Ricoh Tours, Matterport, Immoviewer, Zillow 3D Home, Asteroom, Metareal, and Vpix360. Because each 360° image is captured in one shot, onsite capture with these cameras is rather fast. However, the photographer needs to run and hide in another room while each 360° image is taken, which adds to shooting time. Some 360° cameras support a two-shot mode, where the photographer steps around the camera and takes two shots for one 360° image.

The 360° cameras do not include any measurement technology. Nevertheless, images can be used for inferring 3D space structure and extracting floor plans, though with limited accuracy.

The 360° images from 360° cameras are softer than from DSLR cameras, especially around the edge of each of the two lenses. Nevertheless, since more than 50% of 3D tours are viewed on mobile devices and 3D tours on desktop aren’t often viewed full screen and on a large monitor, limited image resolution is not a big issue.

Small image sensors used in most 360° cameras do cause extra noise in images under low light conditions typical for indoor shooting, which can make the resulting 360° images appear flat and lack depth. The Ricoh THETA Z1 was able to address this issue by using larger image sensors.

DSLR Cameras

DSLR camera setups feature a wide-angle or fisheye lens, typically mounted so as to avoid parallax errors when the camera is rotated into multiple positions to produce a 360° image. The number of positions required depends on the field of view of the lens and is typically four to twelve.
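The shot count follows from the lens's horizontal field of view and the overlap the stitching software needs between neighboring frames. The sketch below covers a single horizontal row only and assumes a 25% overlap; extra shots toward the zenith and nadir, which some setups need, are not counted.

```python
import math


def shots_per_row(hfov_deg: float, overlap: float = 0.25) -> int:
    """Evenly spaced shots needed to cover 360° horizontally while keeping the given
    fraction of each frame overlapping its neighbor."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    step_deg = hfov_deg * (1.0 - overlap)  # new angle each additional shot contributes
    return math.ceil(360.0 / step_deg)
```

With these assumptions, a lens covering 120° horizontally needs four positions and one covering 40° needs twelve, which spans the range quoted above.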

Image courtesy of Nodal Ninja.

There are several software packages that allow for stitching source images with varying degrees of speed and ease of use. Once the 360° images are produced, platforms like Cupix, Metareal, Asteroom, EyeSpy360 and a host of others can be used to turn them into 3D tours.

The capture speed with DSLRs is slower than with 360° cameras due to the need for multiple rotations. However, the photographer is always behind the camera and there is no need to hide in another room. Low light performance indoors is improved due to large image sensors used in DSLR cameras.

Just as with 360° cameras, DSLR setups do not include any measurement technology, but the images can be used for inferring 3D space structure and extracting floor plans.

Mobile Phones on Rotator

Mobile phones can be used with automated rotators to take several shots that are stitched into a 360° image. If the rotator and phone are compatible and correctly set up, the phone can be rotated in a way that minimizes parallax errors.

Because phone lenses have a smaller field of view than the lenses in 360° cameras, 12 to 18 shots are required for a single 360° image, but the resulting 360° image has a higher resolution than one from a 360° camera.

Usually, the phones are not rotated all the way up, so the resulting 360° image does not cover a complete photosphere and part of the ceiling area is missing.
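How much of the ceiling goes missing depends on how high the coverage reaches. Assuming everything from straight down up to an elevation φ above the horizon is captured, the covered part of the sphere is (1 + sin φ) / 2 of the total, so the missing cap is (1 - sin φ) / 2.

```python
import math


def missing_ceiling_fraction(top_elevation_deg: float) -> float:
    """Fraction of the full photosphere above the highest captured elevation,
    assuming full coverage from the nadir up to that elevation."""
    return (1.0 - math.sin(math.radians(top_elevation_deg))) / 2.0
```

For example, if coverage stops 60° above the horizon (a hypothetical figure), about 6.7% of the sphere is missing.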

Because of the required phone rotation, the onsite capture speed is much slower with phones as compared to 360° cameras, even though it takes a comparable number of camera positions on the floor.

The source images are processed into a 3D tour through software platforms such as InsideMaps, and these vendors also provide their own motorized rotators, typically costing under $200.

Handheld Mobile Phones

Matterport, Zillow, and Occipital have apps for handheld smartphone capture where no rotator is needed. The capture is performed by taking multiple pictures in a smooth motion, like “painting” the space. Because of this handheld workflow, parallax errors are unavoidable and generated visuals have many stitching artifacts. This creates certain problems for 3D tours where the visual aspect of presentation is important and requirements for quality of 360° images are high.

Most mobile phones currently do not include any measurement technology, but, just as with 360° cameras, their images can be used for inferring 3D space structure and extracting floor plans. However, recent iPhone and iPad models include a lidar scanner, which allows collection of 3D point clouds.

The capture speed with the handheld workflow is slower than with phones on rotators and much slower than with 360° cameras. Limited battery capacity in mobile devices also has to be considered, especially when intensive image processing is involved. In addition, the lidar range on the new iPhone is about 5 meters. Because of these constraints, handheld capture is best suited to small spaces such as a single room; these devices can hardly compete as the optimal tool for capturing a complete house, and even less so when a busy photographer needs to capture multiple houses per day.

Typical stitching artifacts in a 3D tour captured with an iPhone with lidar.

Image courtesy of Occipital.

Matterport

Matterport’s 3D camera uses three lenses and is rotated into six positions with the resulting 18 source images stitched into one 360° image. Because of that optical setup, stitching artifacts can be visible for close-up objects in the image.

The Matterport camera also incorporates three 3D scanners. These scanners use the structured light measurement principle: a pattern of infrared light is projected outwards and viewed by an infrared camera to compute a 3D point cloud. The camera data is processed through the Matterport platform to produce a 3D tour that includes a 3D mesh with the dollhouse and optional floor plans.

The need to collect overlapping 3D point clouds and produce a 3D mesh may require two to three times more camera positions than with 360° cameras, and the overall capture speed will be correspondingly slower.

Image courtesy of Matterport.

Leica

The Leica BLK360 Imaging Scanner includes several panoramic lenses and the resulting 360° images can exhibit stitching artifacts.

The BLK360 employs a time-of-flight 3D laser scanner (lidar) with the highest accuracy and longest range of all the systems discussed in this article. The system was designed for applications in construction and architecture and costs quite a bit more than the other discussed equipment.

Leica’s BLK360 software does not produce 3D tours, but the data can be processed through the Matterport Cloud, resulting in a 3D tour with the standard Matterport features.

The BLK360 can take up to three or even five minutes to collect each 360° image. However, due to the much longer range of the 3D scanner, not as many capture positions are required and the overall capture speed is generally comparable to that of the Matterport camera.

Image courtesy of Leica.

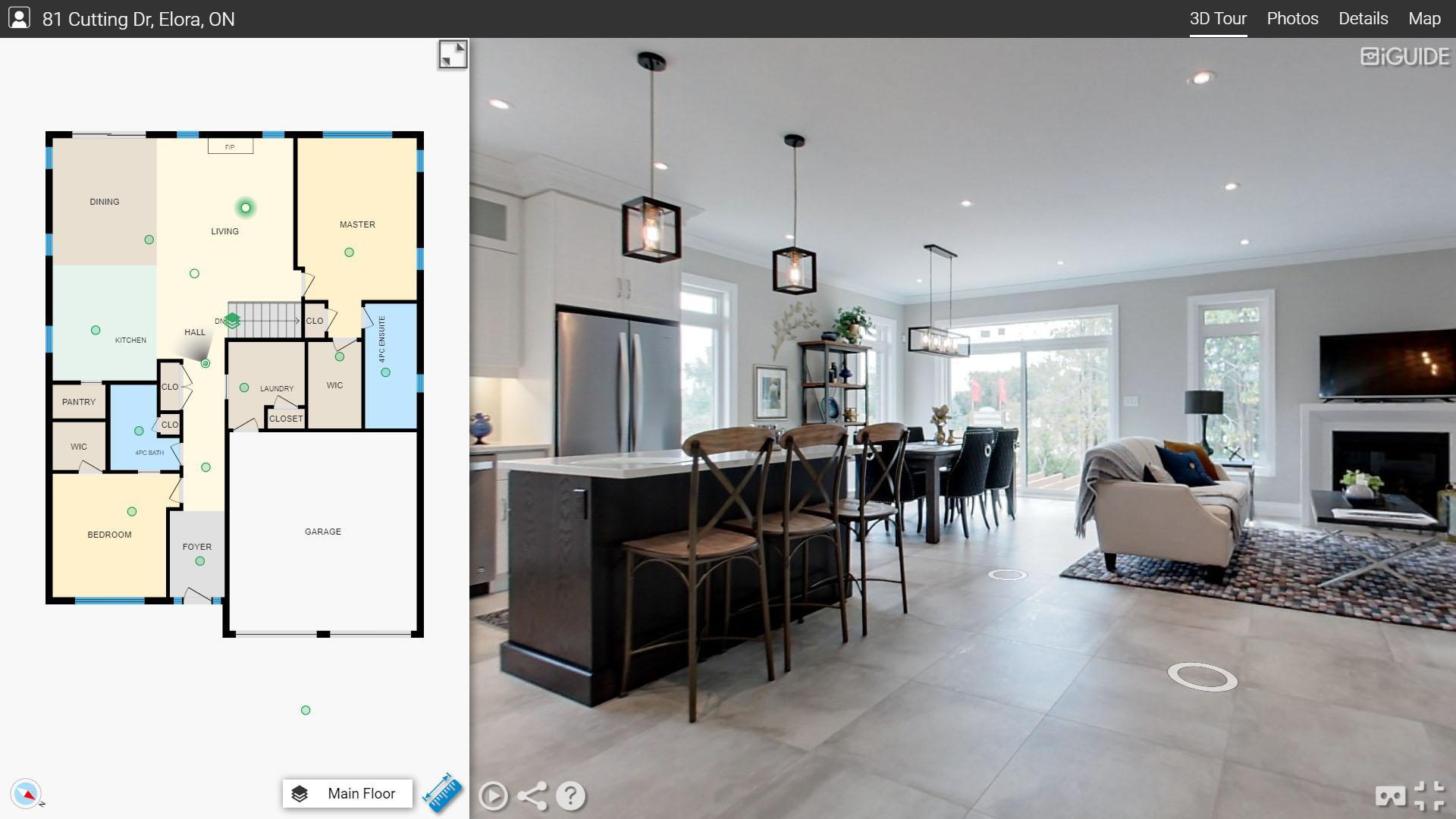

iGUIDE

The iGUIDE PLANIX camera system by Planitar features a 360° camera combined with a time-of-flight 2D laser scanner with a long range. The combined 360° imagery and 2D laser point cloud data allow for not only constructing accurate floor plans, but also making measurements in 3D space without having a 3D mesh.
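A 2D laser scanner reports one range per beam angle in a horizontal plane, and turning those readings into floor-plane points is a polar-to-Cartesian conversion. The sketch below is generic and not iGUIDE's actual processing; it assumes the scanner's position and heading on the floor are already known (for example from registering overlapping scans) and treats missing returns as `None`.

```python
from __future__ import annotations

import math


def scan_to_points(ranges_m: list[float | None], start_deg: float = 0.0, step_deg: float = 1.0,
                   pose: tuple[float, float, float] = (0.0, 0.0, 0.0)) -> list[tuple[float, float]]:
    """Convert a 2D scan into (x, y) floor points in meters.
    pose = (x, y, heading_deg) of the scanner in floor coordinates (assumed known)."""
    px, py, heading_deg = pose
    points = []
    for i, r in enumerate(ranges_m):
        if r is None or r <= 0.0:
            continue  # no usable return for this beam
        a = math.radians(heading_deg + start_deg + i * step_deg)
        points.append((px + r * math.cos(a), py + r * math.sin(a)))
    return points
```

Points from several positions, once placed in common floor coordinates, outline the walls from which a floor plan can be drawn.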

The camera data is processed through the iGUIDE platform to produce a 3D tour with floor plans and property measurements.

Due to the long range of the lidar, the iGUIDE camera needs fewer camera positions to collect the necessary data and its onsite capture speed is about the same as with 360° cameras while at the same time providing much more accurate measurement data for constructing floor plans. A 3000 sq ft home can be captured in 15 minutes.

Image courtesy of iGUIDE.

Measurements in 3D Tours

Here we will compare different methods that are used to extract space structure and measurements from data that was captured by various technologies. The space structure can be used to create floor plans, which greatly enhance the 3D tour experience, as was already discussed.

The public generally perceives floor plans as engineering drawings produced from accurate measurements, so it is important to look at the relative measurement accuracy of the various methods.

3D Structure from Images

The 3D structure of rooms can be extracted from regular and 360° images using artificial intelligence (AI) or human processing. With some independent measurement information, such as camera tripod height or ceiling height, scaled floor plans can then be produced from that 3D structure.
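As an illustration of how a known camera height can scale image-derived structure, the sketch below estimates the horizontal distance to a wall from the pixel row where the wall meets the floor in an equirectangular 360° image. The mapping (row 0 straight up, the last row straight down), the level camera, and the flat floor are simplifying assumptions for this sketch; production systems use more elaborate models.

```python
import math


def row_to_depression_deg(row: float, image_height: float) -> float:
    """Angle below the horizon for a pixel row of an equirectangular 360° image."""
    return (row / image_height - 0.5) * 180.0


def wall_distance_m(camera_height_m: float, row: float, image_height: float) -> float:
    """Horizontal distance to where the wall meets the floor, assuming a level
    camera at a known height above a flat floor."""
    dep = math.radians(row_to_depression_deg(row, image_height))
    if dep <= 0.0:
        raise ValueError("floor-wall boundary must be below the horizon")
    return camera_height_m / math.tan(dep)
```

A one-row error matters more for distant walls: their floor boundary sits close to the horizon, where a small angle error translates into a large distance error.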

InsideMaps, Cupix, Zillow 3D Home, and Matterport (for 360° cameras and handheld smartphone capture) are technologies where such processing is or could be used. Measurements taken on such floor plans can have an error or uncertainty of up to 4 to 8%.

Structured Light

Structured light is used by the Matterport 3D camera and the Occipital 3D scanner. This technology collects a 3D point cloud directly, and floor plans can easily be produced from that data. The typical error or uncertainty on such floor plans is around 1%, but attaining that requires close spacing between scan positions.

Lidar

Lidar-based systems, such as the Leica BLK360, Apple iPhones with lidar, and the iGUIDE cameras use time-of-flight laser measurement and produce accurate laser point clouds – a 3D point cloud with Leica or iPhone and a 2D point cloud with iGUIDE. Floor plans created from such data have the highest accuracy of all the methods described in this review, with 0.1% uncertainty for Leica and 0.5% uncertainty for iGUIDE, while iPhones with lidar are reported to have 1% uncertainty (in the absence of published specifications by Apple).
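In its simplest, pulsed form, time-of-flight ranging converts the round-trip time of a light pulse into distance. The sketch below shows only that basic relation; real instruments add calibration, averaging, or phase-based techniques, and the 100 ps timing error in the note is purely illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact value in vacuum


def tof_distance_m(round_trip_s: float) -> float:
    """Range from a pulse's round-trip time: the light travels out and back, hence / 2."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def tof_range_error_m(timing_error_s: float) -> float:
    """Range error caused by a fixed timing error. It does not depend on the range itself."""
    return SPEED_OF_LIGHT_M_S * timing_error_s / 2.0
```

A 20 ns round trip corresponds to about 3 m, and an illustrative 100 ps timing error gives about 1.5 cm of range error whether the wall is 1 m or 20 m away.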

There is a little more to be said about the measurement accuracy of lidar compared with structured light 3D scanning or 3D structure from images. The time-of-flight laser measurement error does not depend on the distance measured; it stays constant. This means that no matter how large or small a room is, its measured size may be off by the same fixed amount, say one inch. Only when several rooms are combined to measure a whole floor may the overall floor plan error grow somewhat with the size of the floor, depending mostly on the number of rooms.
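How the error grows when rooms are chained together depends on whether the per-room errors are independent. As a hedged sketch: if each room contributes an independent error of the same size, the errors add in quadrature and grow with the square root of the number of rooms; if they all push in the same direction, they add linearly. Either way, the room sizes themselves do not enter.

```python
import math


def chained_error(per_room_error: float, n_rooms: int, independent: bool = True) -> float:
    """Total error across n_rooms placed end to end, each contributing per_room_error.
    Independent errors add in quadrature; fully correlated ones add linearly."""
    if independent:
        return per_room_error * math.sqrt(n_rooms)
    return per_room_error * n_rooms
```

For example, four rooms in a row, each measured to within one inch, give about two inches of overall error in the independent case and at most four inches in the worst case.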

For structured light and structure-from-images measurements, the error grows quickly with measured distance, roughly with its square. For example, the math says that if a 10 foot room is measured from one camera position with a 1 inch error using one of these technologies, then a 20 foot room measured from one camera position will have a 4 inch error. To control and reduce this runaway error, more camera positions are needed in each room, with closer spacing between them, which increases shooting time and reduces onsite capture speed.
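The 1 inch to 4 inch example follows from the standard triangulation relation behind stereo and structured-light depth: depth Z = f * B / d, so a fixed disparity error σ_d produces a depth error of roughly Z² * σ_d / (f * B), which quadruples when the distance doubles. The focal length, baseline, and disparity error below are hypothetical values chosen only to show the scaling.

```python
def triangulation_depth_error(depth_m: float, focal_px: float, baseline_m: float,
                              disparity_err_px: float) -> float:
    """First-order depth error for triangulation (Z = f * B / d): Z**2 * sigma_d / (f * B)."""
    return depth_m ** 2 * disparity_err_px / (focal_px * baseline_m)


def scale_error(ref_error: float, ref_distance: float, distance: float) -> float:
    """Error at a new distance, given the error at a reference distance, using Z**2 growth."""
    return ref_error * (distance / ref_distance) ** 2


# The article's example: 1 inch of error at 10 ft becomes 4 inches at 20 ft.
error_at_20_ft_in = scale_error(1.0, 10.0, 20.0)

# Same scaling from the full formula with hypothetical camera parameters.
e10 = triangulation_depth_error(3.0, focal_px=580.0, baseline_m=0.075, disparity_err_px=0.1)
e20 = triangulation_depth_error(6.0, focal_px=580.0, baseline_m=0.075, disparity_err_px=0.1)
```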

Square Footage Accuracy

When property square footage is computed from floor plans, the resulting percentage error in square footage is roughly twice the percentage error in the floor plan measurements, because area is the product of two linear dimensions. So structured light systems will typically have a 2% error in square footage, while lidar systems will be well below 2%, with lots of headroom.
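A short calculation shows where the factor of two comes from. Assuming length and width are both off by the same relative amount e in the same direction (for example, a scale error), the area is off by (1 + e)² - 1 = 2e + e², which is essentially 2e for small errors. If the two errors were independent and random, the combined figure would be somewhat smaller, about √2 * e.

```python
def area_error_pct(linear_error_pct: float) -> float:
    """Area error when length and width are both off by linear_error_pct in the same direction."""
    e = linear_error_pct / 100.0
    return ((1.0 + e) ** 2 - 1.0) * 100.0


structured_light_pct = area_error_pct(1.0)  # about 2.01% for a 1% linear error
lidar_05_pct = area_error_pct(0.5)          # about 1.0% for a 0.5% linear error
```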

The 2% level is important, because it is the maximum allowable square footage error in several property measurement standards, like the BOMA standards for commercial real estate or the Alberta Residential Measurement Standard.

Conclusion

There is a broad range of technologies that can be used for creating 3D tours, each with varying degrees of accuracy, ease of use, and cost. We looked at the most popular ones in the North American marketplace and discussed their most important features.

When choosing a 3D tour technology, each particular use case may have different requirements and constraints. In the most common scenarios, speed of capture and availability of floor plan navigation would seem to be the most desirable features for a tour producer with all else being equal. In addition, according to both the National Association of Realtors® (NAR) and Zillow studies, over 80% of home buyers consider floor plans important or very important.

Given the public expectation that floor plans are the result of precise measurement, and with property measurement standards becoming more common in the industry, the measurement accuracy of a 3D tour technology becomes just as important as capture speed.

About the Author

Alex Likholyot is co-founder and CEO of Planitar, the company behind the iGUIDE technology. Alex holds a PhD degree in physics and spent many years developing optical measurement systems in industrial and medical metrology fields before applying that expertise to the proptech industry.